@@ -16,7 +16,7 @@

\end{frame}

}

-\title{Databases 2 - Optional Paper Presentation}

+\title{Databases 2 - Optional Presentation}

\author{Andrea Gussoni}

\institute{Politecnico di Milano}

\date{July 15, 2016}

@@ -37,22 +37,22 @@

\end{frame}

\begin{frame}

-\frametitle{The Starting Problem}

+\frametitle{The Problem}

Distributed database systems are increasingly common, which makes the task of coordinating the different entities involved more and more important.

\end{frame}

\begin{frame}

-\frametitle{The Starting Problem}

+\frametitle{The Problem}

\textbf{Concurrency control} protocols are usually necessary because we want to guarantee the consistency of application-level data through a database layer that detects and resolves possible conflicts. An example is two-phase locking (2PL), a serialization technique that is often used in commercial DBMSs.

\end{frame}

\begin{frame}

-\frametitle{The Starting Problem}

+\frametitle{The Problem}

Combining concurrency control with a distributed scenario requires complex protocols (such as 2PC) that coordinate the entities involved in a transaction, which introduces latency. Coordination also means that we cannot fully exploit the parallel resources of a distributed environment, because the coordination phase adds a large overhead.

\end{frame}

\begin{frame}

-\frametitle{The Starting Problem}

+\frametitle{The Problem}

Usually we pay coordination overhead in terms of:

\begin{itemize}

\item Increased latency.

@@ -61,6 +61,15 @@ Usually we pay coordination overhead in term of:

\end{itemize}

\end{frame}

+\begin{frame}

+\frametitle{The Problem}

+\begin{figure}

|

|

|

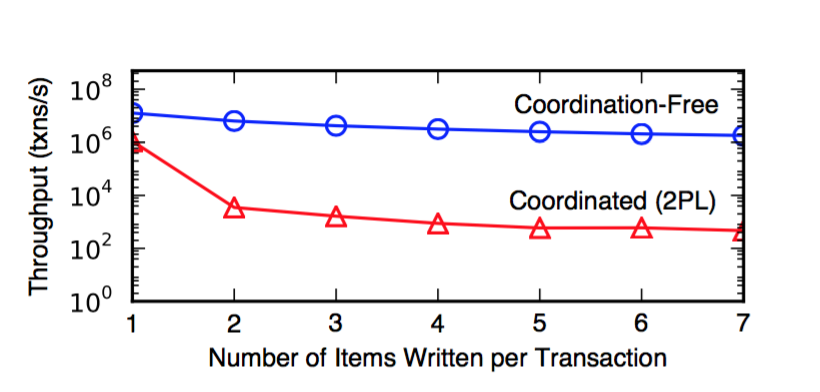

+\caption{Microbenchmark performance of coordinated and coordination-free execution on eight separate multi-core servers.}

+\centering

+\includegraphics[width=0.85\textwidth,height=0.60\textheight]{2pl-free}

+\end{figure}

+\end{frame}

+

\begin{frame}

\frametitle{Invariant Confluence}

The authors of the paper propose a new technique (or, better, an analysis framework) that, when applied, considerably reduces the need for coordination between database entities. This lowers the cost in terms of bandwidth and latency and considerably increases the overall throughput of the system.

@@ -122,7 +131,7 @@ Luckily there are some standard situations for the analysis of invariants that w

\begin{frame}

\frametitle{Benchmarking}

-In the next few slides there are some plots of the result obtained in the benchmarks by the authors. The New-Order label refers to the fact that the authors when an unique id assignment was needed, they assigned a \emph{temp-ID}, and only just before the commit a sequential \emph{real-ID} was assigned, and a table mapping \emph{tmp-ID} to \emph{real-ID} created.

+In the next few slides there are some plots of the results obtained by the authors. The \textbf{New-Order} label refers to the fact that, when a unique ID assignment was needed, the authors decided to assign a \emph{temp-ID}; only just before the commit is a sequential \emph{real-ID} assigned (as required by the specification of the benchmark), and a table mapping \emph{temp-ID} to \emph{real-ID} is created.

\end{frame}
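
The deferred ID assignment described in the Benchmarking slide can be sketched roughly as follows. This is only an illustrative sketch under stated assumptions, not the authors' implementation: the names `SequentialIdAllocator`, `Transaction`, `new_order` and `commit` are hypothetical, and a single in-process lock stands in for whatever coordination a real system would need at commit time.

```python
import itertools
import threading
import uuid


class SequentialIdAllocator:
    """Hands out sequential IDs; this is the only coordinated step (assumed)."""

    def __init__(self, start=1):
        self._counter = itertools.count(start)
        self._lock = threading.Lock()

    def next_id(self):
        with self._lock:
            return next(self._counter)


class Transaction:
    """Hypothetical transaction that works with temp-IDs until commit."""

    def __init__(self):
        self.temp_ids = []

    def new_order(self):
        # No coordination here: a random temp-ID is generated locally.
        temp_id = uuid.uuid4().hex
        self.temp_ids.append(temp_id)
        return temp_id

    def commit(self, allocator, id_map):
        # Just before commit, assign sequential real-IDs and record the mapping.
        for temp_id in self.temp_ids:
            id_map[temp_id] = allocator.next_id()
        return [id_map[t] for t in self.temp_ids]


allocator = SequentialIdAllocator()
id_map = {}  # table mapping temp-ID -> real-ID

t1, t2 = Transaction(), Transaction()
a = t1.new_order()
b = t2.new_order()
c = t1.new_order()

real_t2 = t2.commit(allocator, id_map)  # t2 commits first
real_t1 = t1.commit(allocator, id_map)
```

In this sketch the real-IDs follow commit order rather than creation order, so the transaction that commits first receives the lowest ID even though its order was created later.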

\begin{frame}

@@ -245,7 +254,7 @@ Hekaton utilizes optimistic multi-version concurrency control (MVCC), it mainly

\begin{frame}

\frametitle{Takeaways}

\begin{itemize}

- \item We nedd a new phase called \textbf{validation}, that checks just before a commit action that all the records used during the transactions still exist, are valid or are have not been modified.

+ \item We need a new phase called \textbf{validation}, that checks just before a commit action that all the records used during the transactions still exist, are valid and have not been modified by another concurrent transaction.

\item There is \textbf{no deletion} in the strict sense of the term. The records to be deleted have their end timestamps changed, and the garbage collector removes the unused records (once all the live transactions began after the deletion).

\end{itemize}

\end{frame}
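
The two takeaways above (commit-time validation and deletion via end timestamps) can be illustrated with a small multi-version store. This is a minimal sketch under stated assumptions, not Hekaton's actual implementation: `Version`, `Store` and all method names are hypothetical, and a simple integer counter stands in for the system's timestamp source.

```python
import math
from dataclasses import dataclass


@dataclass
class Version:
    value: str
    begin_ts: float
    end_ts: float = math.inf  # infinity means "still the latest version"

    def visible_at(self, ts):
        return self.begin_ts <= ts < self.end_ts


class Store:
    """Hypothetical multi-version store with a logical clock."""

    def __init__(self):
        self.versions = {}  # key -> list of Version
        self.clock = 0

    def tick(self):
        self.clock += 1
        return self.clock

    def read(self, key, ts):
        for version in self.versions.get(key, []):
            if version.visible_at(ts):
                return version
        return None

    def write(self, key, value):
        ts = self.tick()
        old = self.read(key, ts)
        if old is not None:
            old.end_ts = ts  # the old version stops being visible at ts
        self.versions.setdefault(key, []).append(Version(value, ts))
        return ts

    def delete(self, key):
        # No physical deletion: only the end timestamp changes.
        ts = self.tick()
        old = self.read(key, ts)
        if old is not None:
            old.end_ts = ts
        return ts

    def validate(self, read_set, commit_ts):
        # Every version that was read must still be visible at commit time.
        return all(v.visible_at(commit_ts) for _, v in read_set)

    def garbage_collect(self, oldest_active_ts):
        # Drop versions that no live transaction can still see.
        for key in list(self.versions):
            kept = [v for v in self.versions[key] if v.end_ts > oldest_active_ts]
            if kept:
                self.versions[key] = kept
            else:
                del self.versions[key]


store = Store()
store.write("x", "v1")
start_ts = store.tick()            # a transaction begins
seen = store.read("x", start_ts)   # and reads version v1
store.write("x", "v2")             # a concurrent writer replaces v1
commit_ts = store.tick()
valid = store.validate([("x", seen)], commit_ts)

store.delete("x")
versions_after_delete = len(store.versions["x"])
store.garbage_collect(oldest_active_ts=store.clock + 1)
```

Here `valid` is false because the version that was read has been superseded by a concurrent write, so the transaction would have to abort. Both versions remain stored after the delete and are removed only once the garbage collector runs with a timestamp later than the deletion.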

@@ -322,7 +331,7 @@ There is related research in progress in this direction:

\item \textbf{HyPer:} a hybrid between OLTP and OLAP; it organizes data in chunks using different virtual memory pages, finds the cold data and compresses it for OLAP usage.

\end{itemize}\pause

\begin{block}{}

- The approach used in Siberia has the great advantage to have an access at record level, and for databases where the cold storage in between 10\% and 20\% it has the great advantage of not requiring additional structures in memory (except the compact bloom-filters) for the cold data.

+ The approach used in Siberia has the great advantage of providing access at record level, and for databases where the cold storage is between 10\% and 20\% of the whole database it has the further advantage of not requiring additional structures in memory (except the compact Bloom filters) for the cold data.

\end{block}

\end{frame}
